In the digital era, speed defines experience. Whether it’s a streaming platform buffering your favourite series or an e-commerce site taking too long to load, even a few seconds can decide whether a user stays or leaves. Traditional cloud-based applications, though powerful, often struggle with the sheer distance between users and central servers. This is where edge computing steps in—like moving a small café closer to a busy neighbourhood instead of expecting everyone to travel to the city centre for coffee.

Edge computing moves data processing closer to the user, which shortens the network round trip, lowers latency, and makes applications feel more responsive.

Understanding the Shift from Cloud to Edge

The traditional cloud model works like a massive library—centralised, well-organised, but far from many of its readers. Every time a user makes a request, data must travel to and from these central data hubs, creating delays.

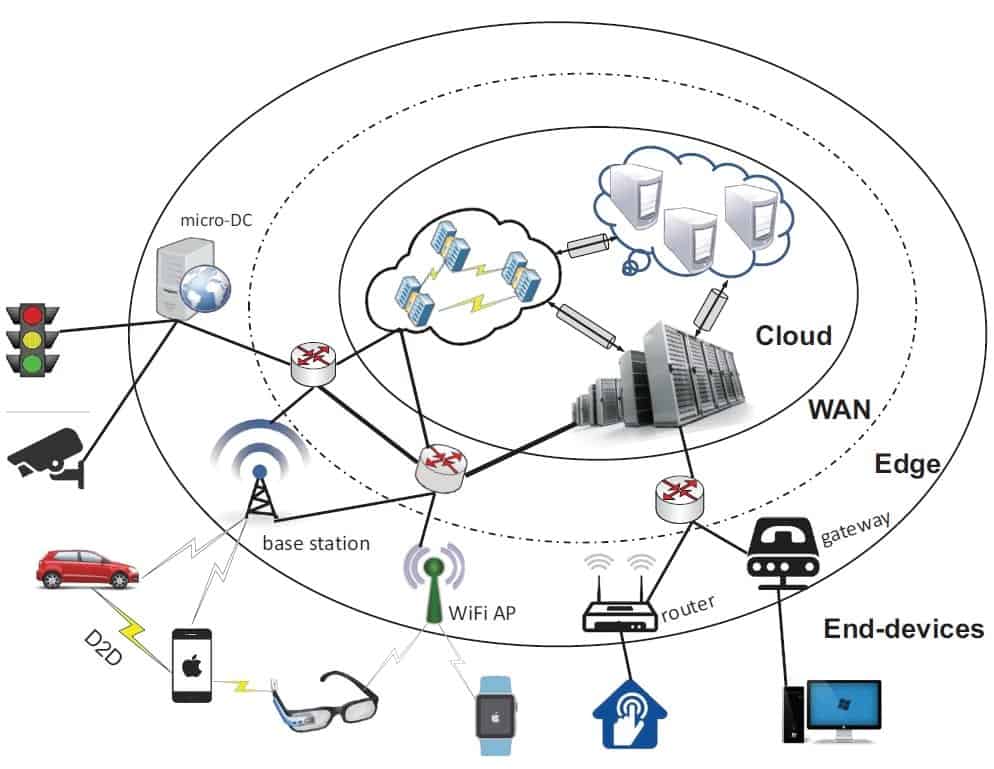

Edge computing flips this model by decentralising operations. It places micro data centres—often called edge nodes—closer to users. These nodes handle computations locally and only send essential data to the central cloud for storage or further analysis.
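To make the idea of "process locally, send only the essentials" concrete, here is a minimal sketch in Python. The `EdgeNode` class, the node name, and the sample readings are all hypothetical and are not taken from any particular edge platform; the point is only that the raw readings stay on the node while a small summary travels to the central cloud.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class EdgeNode:
    """Hypothetical edge node that keeps raw readings local."""

    name: str
    readings: List[float] = field(default_factory=list)

    def record(self, value: float) -> None:
        # Raw data is stored and processed on the node itself.
        self.readings.append(value)

    def summary(self) -> Dict[str, object]:
        # Only this compact summary would be uploaded to the cloud.
        return {
            "node": self.name,
            "count": len(self.readings),
            "mean": mean(self.readings),
            "max": max(self.readings),
        }


node = EdgeNode("edge-eu-west")  # assumed node name
for value in [12.0, 15.0, 9.0]:
    node.record(value)

cloud_payload = node.summary()
print(cloud_payload)
```

Three readings go in, one four-field summary comes out. In a real deployment the saving grows with the volume of raw data each node handles.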

Developers taking full stack Java developer training often study this architecture, as it is reshaping how web applications serve large user bases with minimal delay.

The Role of CDNs in Edge Application Delivery

Content Delivery Networks (CDNs) were the first major step toward edge computing. CDNs cache copies of static files like images, stylesheets, and scripts at multiple geographic locations. When a user visits a website, these resources are delivered from the nearest CDN node, cutting down the time data travels.
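The "nearest node" idea can be sketched with a simple distance calculation. This is an illustration only: the node names and coordinates below are made up, and production CDNs usually steer traffic with DNS or anycast routing and live network measurements rather than raw geographic distance.

```python
import math

# Hypothetical CDN nodes as (latitude, longitude) in degrees.
CDN_NODES = {
    "london": (51.5074, -0.1278),
    "singapore": (1.3521, 103.8198),
    "new-york": (40.7128, -74.0060),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_node(user_location):
    """Return the name of the node closest to the user."""
    return min(CDN_NODES,
               key=lambda name: haversine_km(user_location, CDN_NODES[name]))


paris = (48.8566, 2.3522)
print(nearest_node(paris))
```

A user in Paris is matched to the London node here because it is the shortest hop of the three, which is the same intuition behind serving cached files from a nearby location.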

However, modern edge computing extends beyond content caching—it enables logic execution at the edge. With serverless edge functions, developers can run parts of their applications (like authentication, personalisation, or data filtering) right on the CDN edge node.
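As a rough illustration of authentication at the edge, the sketch below checks a signed token locally, so an invalid request never has to reach the origin. It uses only Python's standard `hmac` and `hashlib` modules. The token format, the `SECRET` value, and the `handle_at_edge` function are assumptions made for this example and do not represent any specific platform's API.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real deployment would load this from
# the platform's secret store rather than hard-coding it.
SECRET = b"demo-shared-secret"


def sign(user_id: str) -> str:
    """Issue a token of the form '<user_id>.<hex signature>'."""
    mac = hmac.new(SECRET, user_id.encode(), hashlib.sha256).hexdigest()
    return f"{user_id}.{mac}"


def handle_at_edge(token: str) -> dict:
    """Validate the token on the edge node without calling the origin."""
    user_id, _, mac = token.rpartition(".")
    expected = hmac.new(SECRET, user_id.encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    if not user_id or not hmac.compare_digest(mac, expected):
        return {"status": 401, "body": "invalid token"}
    return {"status": 200, "body": f"hello, {user_id}"}


print(handle_at_edge(sign("alice")))
print(handle_at_edge("alice.tampered"))
```

Rejecting bad tokens at the edge keeps that traffic off the central servers, and valid users get their response after a single short hop.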

This not only reduces latency but also improves resilience: if one region goes down, traffic can be redirected to another, so users see little or no interruption.

Edge Functions: Logic on the Move

Think of edge functions as “pop-up offices” for your applications. Instead of forcing every process to route back to headquarters (the central cloud), these functions can execute small workloads closer to the customer.

For example, an online gaming platform can validate player sessions at the nearest edge node, ensuring rapid responses and a fair playing field. Similarly, e-commerce sites can offer location-based promotions in real time without pinging central servers.
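The location-based promotion case can be sketched as a lookup that runs entirely on the edge node. The country codes, promotion texts, and the `promotion_for` function are invented for illustration; how a platform exposes the visitor's country varies, so it is passed in here as a plain parameter.

```python
# Hypothetical promotions keyed by two-letter country code.
PROMOTIONS = {
    "GB": "Free UK delivery this weekend",
    "IN": "Festive sale: 10% off",
}
DEFAULT_PROMOTION = "Browse our latest offers"


def promotion_for(country_code: str) -> str:
    """Pick a promotion locally, with no call to a central server."""
    return PROMOTIONS.get(country_code.upper(), DEFAULT_PROMOTION)


print(promotion_for("gb"))
print(promotion_for("FR"))
```

Because the table lives on the node, the personalised banner can be chosen in the same step that serves the page.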

These advancements are transforming how developers design systems—prioritising decentralisation, scalability, and speed. Those engaged in full stack Java developer training often experiment with these architectures to understand the future of application delivery and real-time responsiveness.

Security and Reliability at the Edge

Processing data closer to users introduces new challenges, especially concerning security and governance. Each edge node must be protected to prevent vulnerabilities that could expose user data or system logic.

Modern edge platforms typically include built-in encryption, identity management, and zero-trust controls to keep security consistent across distributed environments. Redundancy adds availability: if one edge node fails, traffic is rerouted to another healthy node.
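The rerouting behaviour can be sketched as "try nodes in order of preference and skip the unhealthy ones". The node names and the `route` function below are hypothetical, and a real platform would base health on continuous checks rather than a static dictionary.

```python
from typing import Dict, List, Optional


def route(preferred: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy node in preference order, or None."""
    for node in preferred:
        # Nodes missing from the health map are treated as unhealthy.
        if healthy.get(node, False):
            return node
    return None


# Nearest node first, then progressively more distant fallbacks.
preference = ["edge-paris", "edge-frankfurt", "edge-london"]

all_up = {"edge-paris": True, "edge-frankfurt": True, "edge-london": True}
paris_down = {"edge-paris": False, "edge-frankfurt": True, "edge-london": True}

print(route(preference, all_up))
print(route(preference, paris_down))
```

When the nearest node is down, the request falls through to the next one on the list. The user pays a little extra latency but still gets served.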

This decentralised model resembles biological systems: if one neuron fails, the brain compensates. Edge networks work in a similar way, improving reliability by spreading work across many nodes so that no single failure takes the service down.

The Future of Edge Computing and Application Delivery

Edge computing represents more than a technological evolution—it’s a philosophical shift toward responsiveness and proximity. As 5G, IoT, and AI continue to advance, the edge will become the new frontier for innovation, where data isn’t just stored but understood instantly.

Developers will increasingly adopt architectures that blend cloud scalability with edge immediacy. The result? Applications that feel instantaneous, adaptive, and intelligent.

Conclusion

Edge computing brings computation, intelligence, and security closer to users, reducing latency and enhancing reliability. It’s no longer just about where data lives—it’s about where it thinks.

For developers and engineers looking to drive this transformation, it is essential to learn how to design, deploy, and optimise applications for edge environments. Hands-on experience with edge platforms and serverless frameworks can help bridge the gap between traditional cloud concepts and modern distributed architectures.

Just as cities thrive when essential services move closer to communities, digital ecosystems flourish when computation moves closer to users—the edge is where the future unfolds.